Researchers at the National Institutes of Health (NIH) have found that while artificial intelligence (AI) tools can accurately diagnose genetic diseases from textbook-like descriptions, their accuracy drops significantly when they analyze health summaries written by patients themselves. These findings, published in the *American Journal of Human Genetics*, emphasize that AI tools need further development before they can be used reliably in healthcare settings to make diagnoses and answer patient questions.

The study focused on large language models, a type of AI trained on vast amounts of text. These models hold great potential in medicine because they can process and respond to questions and often have intuitive interfaces.

“We often overlook how much of medicine relies on language,” said Dr. Ben Solomon, senior author of the study and clinical director at the NIH’s National Human Genome Research Institute (NHGRI). “Electronic health records, doctor-patient conversations—these are all word-based. Large language models represent a significant advancement in AI, and their ability to analyze language in clinically useful ways could be transformative.”

The researchers tested 10 different large language models, including two recent versions of ChatGPT, by designing questions about 63 genetic conditions based on medical textbooks and other reference materials. These conditions ranged from common ones like sickle cell anemia and cystic fibrosis to rare genetic disorders. The questions were framed in a standardized format, such as, “I have X, Y, and Z symptoms. What’s the most likely genetic condition?”

The models’ performance varied widely, with initial accuracy rates ranging from 21% to 90%. GPT-4, one of the latest versions of ChatGPT, was the most accurate. In general, larger models performed better. Here, size refers to the number of parameters, and the largest models contain more than a trillion. Even the lower-performing models, once optimized, delivered more accurate results than traditional non-AI methods such as standard Google searches.

The researchers also tested the models in other ways, including replacing medical terms with everyday language to reflect how patients or caregivers might describe symptoms. For example, instead of “macrocephaly,” a question would say that a child has “a big head.” Although the models’ accuracy decreased with less technical language, 7 of the 10 models still outperformed Google searches.

“It’s essential that these tools are accessible to people without medical knowledge,” said Kendall Flaharty, an NHGRI postbaccalaureate fellow who led the study. “There are very few clinical geneticists worldwide, and in some areas, people have no access to them. AI tools could help answer some questions for these individuals.”

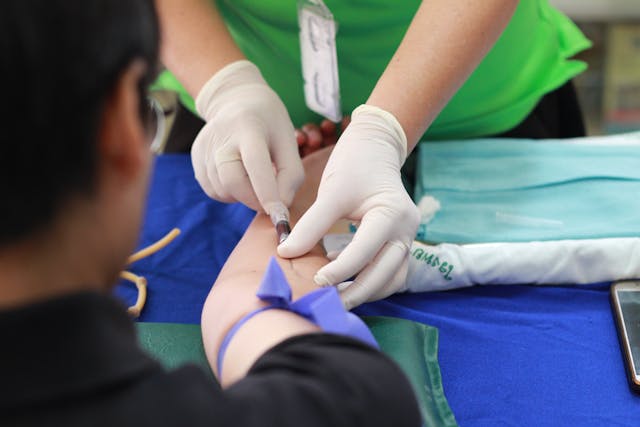

To evaluate how the models handled real patient data, the researchers asked patients at the NIH Clinical Center to write brief descriptions of their genetic conditions and symptoms. These write-ups were typically more varied in style and content than the standardized questions. When analyzing these patient descriptions, even the best-performing model correctly diagnosed only 21% of the cases, with some models performing as poorly as 1%.

The researchers anticipated these challenges since the NIH Clinical Center often treats patients with extremely rare conditions that the models might not be familiar with. However, when the researchers rewrote the patient cases into standardized questions, the models’ accuracy improved, suggesting that the variability in patient language was a significant obstacle.

“To make these models clinically useful, we need more diverse data,” Dr. Solomon said. “This includes not only all known medical conditions but also variations in age, race, gender, cultural background, and more, so the models can understand how different people describe their conditions.”

This study underscores the current limitations of large language models and highlights the ongoing need for human oversight when applying AI in healthcare.

“These technologies are already being introduced into clinical settings,” Dr. Solomon added. “The critical questions now are not if clinicians will use AI, but where and how they should use it, and where it’s best not to rely on AI to ensure the highest quality of patient care.”

- Press release – National Human Genome Research Institute